Learn AI in 5 minutes a day.

Level up your AI knowledge with the latest news, clear explanations of why it matters, and practical tips for applying it to your work. Join a community of learners exploring the world of AI.

Latest Newsletters

ALL NEWSLETTERS →

Latest AI Articles

ALL Articles →

Model Context Protocol (MCP) vs Function Calling: A Deep Dive into AI Integration Architectures

An In-Depth Guide to Firecrawl Playground: Exploring Scrape, Crawl, Map, and Extract Features for Smarter Web Data Extraction

Meta AI Released the Perception Language Model (PLM): An Open and Reproducible Vision-Language Model to Tackle Challenging Visual Recognition Tasks

LLMs Can Now Learn to Try Again: Researchers from Menlo Introduce ReZero, a Reinforcement Learning Framework That Rewards Query Retrying to Improve Search-Based Reasoning in RAG Systems

LLMs Can Now Solve Challenging Math Problems with Minimal Data: Researchers from UC Berkeley and Ai2 Unveil a Fine-Tuning Recipe That Unlocks Mathematical Reasoning Across Difficulty Levels

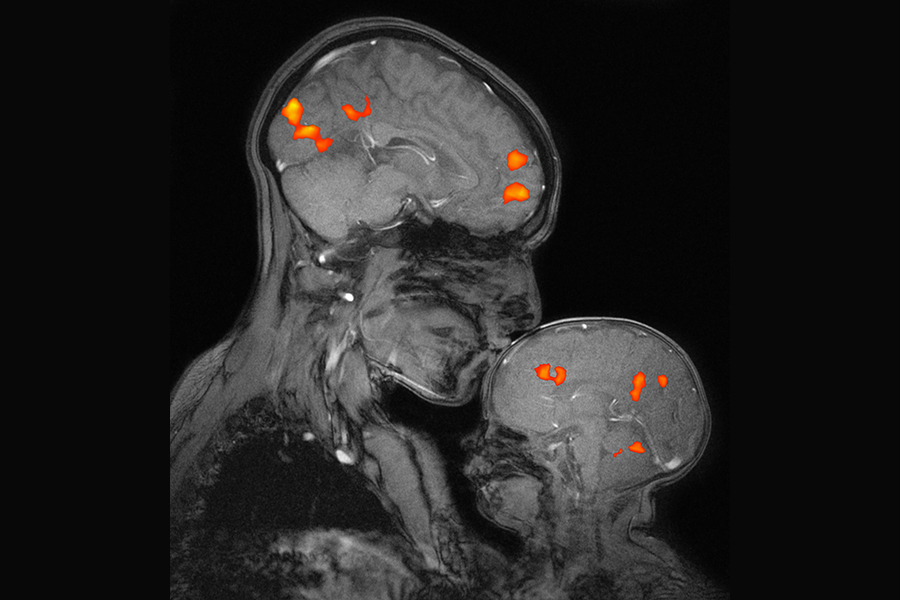

MIT’s McGovern Institute is shaping brain science and improving human lives on a global scale

A quarter century after its founding, the McGovern Institute at MIT reflects on its discoveries in the areas of basic neuroscience, neurotechnology, artificial intelligence, brain-body connections, and therapeutics.

Top AI Tools

10000+ AI Tools →

CopilotKit - Build Copilots 10x Faster

CopilotKit is the simplest way to integrate production-ready Copilots into any product.

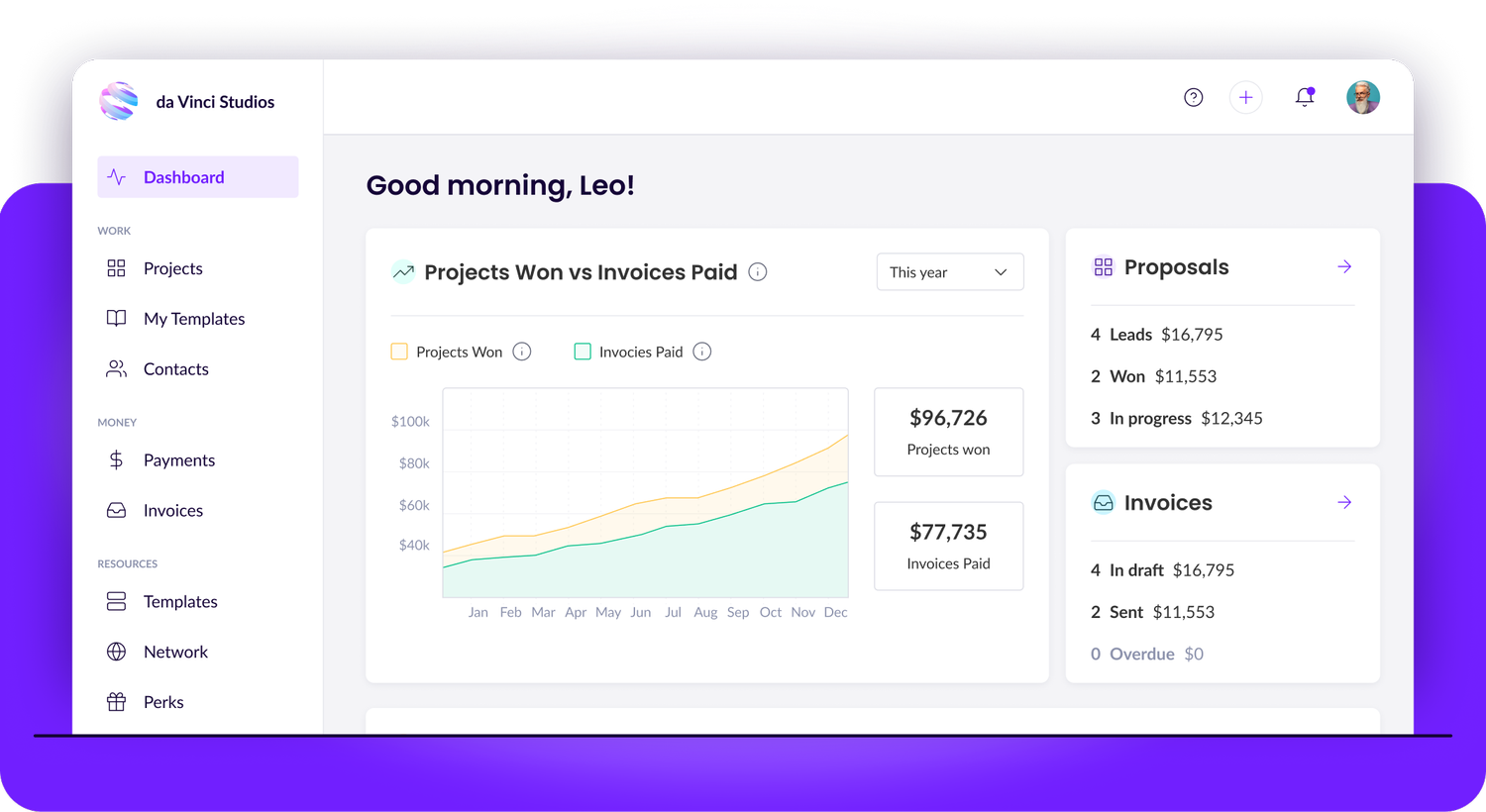

Wethos | Proposals, Invoices, and Teammates All-In-One Place

Wethos is a trusted software platform that helps freelancers, creative studios and agencies create proposals, send invoices, and collaborate with teammates. Explore the new Wethos AI today.

promptmate.io: Build AI-Powered Apps (ChatGPT, Google, ...

Build AI-powered apps to speed up your processes. Combine different AI systems and use bulk processing for greater efficiency and effectiveness.

Upscale Image for Stunning Visuals with AI | Enhance photos up to 4K Resolution

Upscale your images with our AI-powered upscaler. Increase resolution, improve quality, and restore old photos online!

Enterprise AI software for teams between 2 and 5,000 | Team-GPT

Team-GPT helps companies adopt ChatGPT for their work. Organize knowledge, collaborate, and master AI in one shared workspace. 100% private and secure.
